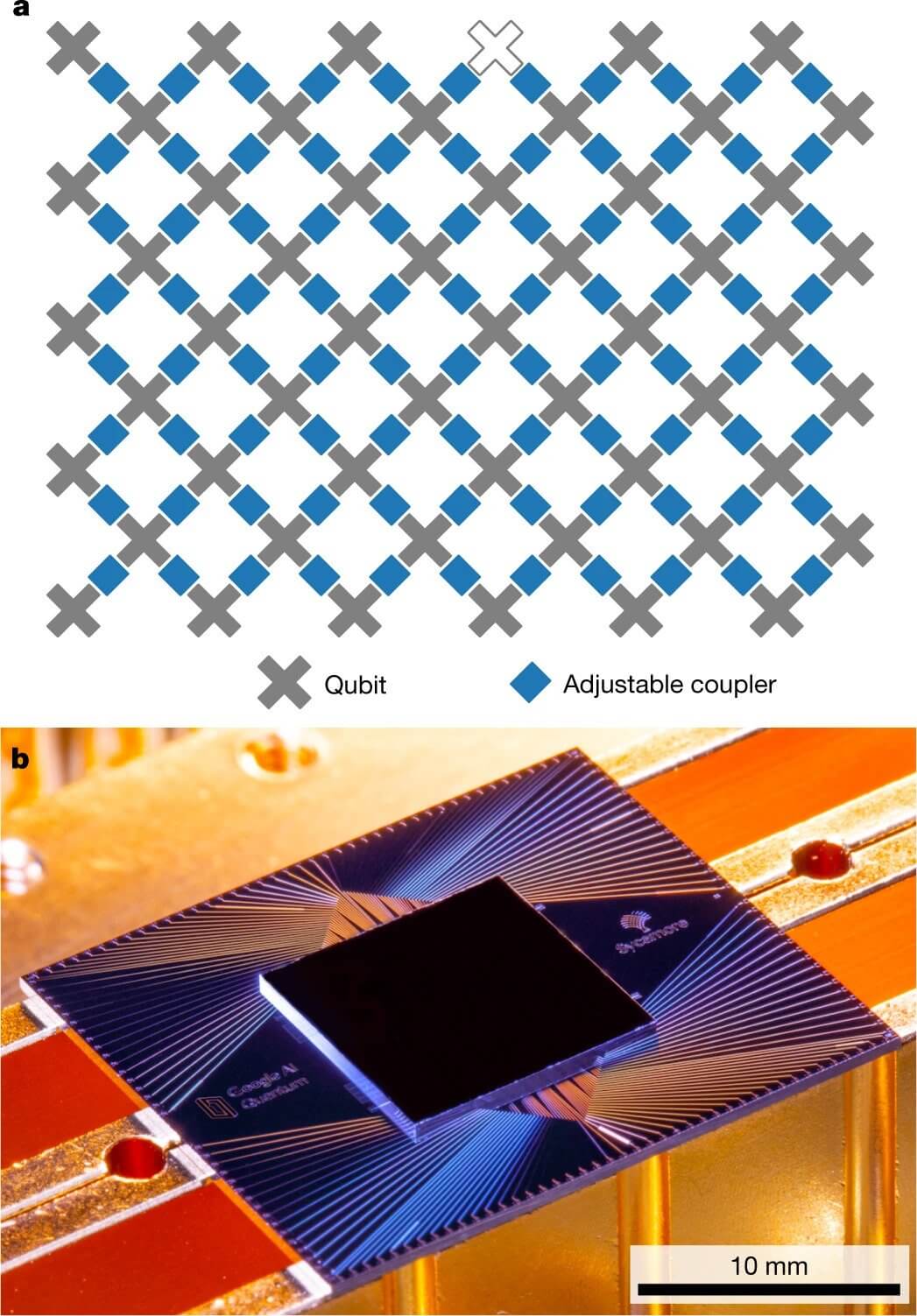

Left: Artist's rendition of the Sycamore processor mounted in the cryostat. Right: Photograph of the Sycamore processor. Image © Google AI Blog

The idea of modern quantum computers began in the early 1980s when physicist Paul Benioff proposed a quantum mechanical model of the Turing machine. Later, Richard Feynman and Yuri Manin suggested that a quantum computer had the potential to simulate things that a classical computer could not. Quantum computers are believed to be able to solve certain computational problems, such as integer factorization (which underlies RSA encryption), significantly faster than classical computers.

Classical computers store information as bits, each of which is definitely either 0 or 1; a bit cannot be in a superposition of the two. Quantum computers instead use quantum bits (qubits), which can be 0, 1, or a superposition of the 0 and 1 states, so a qubit can in a sense represent both values at the same time. Although a quantum computer operates on these in-between states, measuring a qubit always yields either a 0 or a 1.
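Here is a minimal sketch of the measurement rule just described. It assumes a single qubit written as α|0⟩ + β|1⟩ with |α|² + |β|² = 1, a notation the article itself does not use. The helper `measure` is hypothetical and exists only for illustration. It draws outcome 0 with probability |α|² and outcome 1 with probability |β|² (the Born rule), so every individual reading is a plain 0 or 1.

```python
import math
import random


def measure(alpha: complex, beta: complex, shots: int, seed: int = 0) -> dict[int, int]:
    """Simulate repeated measurement of one qubit in the state alpha|0> + beta|1>.

    Hypothetical helper for illustration only.
    """
    if not math.isclose(abs(alpha) ** 2 + abs(beta) ** 2, 1.0, rel_tol=1e-9):
        raise ValueError("state must be normalized: |alpha|^2 + |beta|^2 == 1")
    p0 = abs(alpha) ** 2  # Born rule: probability of reading 0
    rng = random.Random(seed)
    counts = {0: 0, 1: 0}
    for _ in range(shots):
        # Each measurement gives a definite 0 or 1, never an in-between value.
        counts[0 if rng.random() < p0 else 1] += 1
    return counts


# Equal superposition (|0> + |1>) / sqrt(2): roughly half zeros, half ones.
amp = 1 / math.sqrt(2)
print(measure(amp, amp, shots=10_000))
# A qubit prepared in |0> always reads 0.
print(measure(1, 0, shots=100))  # → {0: 100, 1: 0}
```

The superposition only shows up in the statistics of many repeated runs, never in a single readout.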

The power of quantum computing has been debated for more than 30 years. Physicists and front-line quantum computing researchers are hopeful about its development and usefulness. Skeptical voices, however, have always asked whether it will ever do something useful and whether it is worth the investment. Google presents its experiment, referred to as a quantum supremacy experiment, as an important milestone that answers these skeptics.

In the race to achieve quantum supremacy, researchers from Google, in partnership with the U.S. National Aeronautics and Space Administration (NASA), claimed to have achieved it. Their findings were published in the journal Nature on 23 October 2019.

Quantum supremacy is the point at which a quantum computer outperforms classical computers by solving a problem that no classical computer can feasibly solve, regardless of whether the problem is useful. Reaching this regime requires a robust quantum processor, because every additional imperfect operation further degrades the overall fidelity of the computation.
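A simple error model makes the cost of imperfect operations concrete. It assumes errors are independent, so the overall fidelity is roughly the product of (1 − e) over every operation, where e is that operation's error rate. The helper `circuit_fidelity` and the 0.5% error rate below are illustrative assumptions. They are not Sycamore's measured figures.

```python
import math


def circuit_fidelity(error_rates: list[float]) -> float:
    """Estimate whole-circuit fidelity as the product of per-operation fidelities.

    Assumes errors are independent (a simplified, hypothetical model).
    """
    return math.prod(1.0 - e for e in error_rates)


# Hypothetical: every operation has a 0.5% error rate.
for n_ops in (10, 100, 1_000):
    print(n_ops, round(circuit_fidelity([0.005] * n_ops), 4))
```

Even with 99.5% fidelity per operation, 1,000 operations leave well under 1% overall fidelity. Error rates therefore have to be very low before large circuits produce a usable signal.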

The team at Google developed a new 54-qubit processor named “Sycamore”. They reported that Sycamore performed the target computation in 200 seconds. Based on their benchmark measurements, they estimated that the world’s fastest supercomputer would need about 10,000 years to produce a similar output.
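The two figures in this claim imply a speedup ratio that is easy to check. The calculation below assumes a 365.25-day year.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # Julian year, an assumption for the estimate

quantum_seconds = 200                          # Sycamore's reported run time
classical_seconds = 10_000 * SECONDS_PER_YEAR  # Google's supercomputer estimate

speedup = classical_seconds / quantum_seconds
print(f"claimed speedup ≈ {speedup:.2e}")  # → claimed speedup ≈ 1.58e+09
```

The result is a factor of roughly 1.6 billion, and this gap is what the supremacy claim rests on.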

The processor designed by researchers at Google uses transmon qubits. A transmon is a type of superconducting charge qubit. In this type of superconducting circuit, conduction electrons condense into a macroscopic quantum state, such that currents and voltages behave quantum mechanically. The qubit is encoded as the two lowest quantum eigenstates of the resonant circuit.

Each transmon has two controls: a microwave drive to excite the qubit and a magnetic-flux control to tune its frequency. Each qubit is connected to a linear resonator used to read out its state, and to its neighboring qubits through a new adjustable coupler. The coupler design lets the team quickly tune the qubit–qubit coupling from completely off to 40 MHz. Because one qubit did not function properly, the device uses 53 qubits and 86 couplers.

The processor is fabricated using aluminum for the metallization and Josephson junctions, and indium for bump bonds between two silicon wafers. To reduce ambient thermal energy well below the qubit energy, the chip was wire-bonded to a superconducting circuit board and cooled to below 20 mK (millikelvin; 1 mK = $10^{-3}$ K) in a dilution refrigerator. The processor is connected through filters and attenuators to room-temperature electronics, which synthesize the control signals.
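Why cool to 20 mK? A rough check compares the qubit's energy quantum h·f with the thermal energy k_B·T. The 6 GHz qubit frequency below is an illustrative assumption and does not come from the article. The Boltzmann factor exp(−h·f / k_B·T) then approximates how much more rarely the excited state is thermally populated than the ground state. The helper name `thermal_excitation_ratio` is hypothetical.

```python
import math

PLANCK_H = 6.62607015e-34   # J*s (exact SI value)
BOLTZMANN_K = 1.380649e-23  # J/K (exact SI value)


def thermal_excitation_ratio(freq_hz: float, temp_k: float) -> tuple[float, float]:
    """Return (h*f / k_B*T, Boltzmann factor exp(-h*f / k_B*T)) for a two-level system."""
    ratio = (PLANCK_H * freq_hz) / (BOLTZMANN_K * temp_k)
    return ratio, math.exp(-ratio)


# Hypothetical 6 GHz transmon at the 20 mK quoted above.
ratio, boltzmann = thermal_excitation_ratio(6e9, 20e-3)
print(f"hf/kT ≈ {ratio:.1f}, excited/ground population ratio ≈ {boltzmann:.1e}")
```

At room temperature (300 K), the same ratio h·f / k_B·T would fall below 0.001, and thermal noise would swamp the qubit.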

To verify that the system worked, they checked its output against classical simulations and compared the results with a theoretical model. Once the system was verified, they ran hard random circuits on 53 qubits with increasing depth (earlier runs had held the depth fixed). They continued until reaching the point where classical simulation became infeasible.

The team performed the quantum supremacy experiment on Sycamore, a fully programmable 54-qubit processor. The chip needed enough connectivity for qubit states to interact quickly across the entire processor. To provide it, Sycamore is laid out as a two-dimensional grid in which each qubit is connected to four other qubits. This architecture is also forward compatible (upward compatible) with the implementation of quantum error correction; that is, the design can fit with planned future versions of itself.
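Grid connectivity can be illustrated with a plain rectangular lattice. Interior qubits on such a lattice have four nearest neighbors, while edge and corner qubits have fewer. This is a simplified, hypothetical layout (a 6 × 9 grid of 54 qubits) and not Sycamore's actual chip geometry. The helper names are illustrative.

```python
def grid_neighbors(row: int, col: int, rows: int, cols: int) -> list[tuple[int, int]]:
    """Nearest neighbors of qubit (row, col) on a rows x cols square grid."""
    candidates = [(row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1)]
    return [(r, c) for r, c in candidates if 0 <= r < rows and 0 <= c < cols]


def count_couplers(rows: int, cols: int) -> int:
    """One coupler per adjacent pair: horizontal links plus vertical links."""
    return rows * (cols - 1) + cols * (rows - 1)


ROWS, COLS = 6, 9  # hypothetical 54-qubit layout
print(len(grid_neighbors(2, 4, ROWS, COLS)))  # interior qubit → 4
print(len(grid_neighbors(0, 0, ROWS, COLS)))  # corner qubit → 2
print(count_couplers(ROWS, COLS))             # → 93
```

The toy coupler count differs from Sycamore's because this layout is only a stand-in for the real chip.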

To demonstrate quantum supremacy, they compared the new quantum processor against state-of-the-art classical computers on the task of sampling the output of a pseudo-random quantum circuit. To verify that the processor was working properly, they used a protocol known as cross-entropy benchmarking (XEB). XEB compares how often each bitstring is observed experimentally with its ideal probability, computed by simulation on a classical computer. For a given circuit, the team collected the measured bitstrings, denoted {xᵢ}, and computed the linear cross-entropy benchmarking fidelity. Computer scientist Bill Fefferman of the University of Chicago said, "It's literally as if the code of their program was chosen randomly."
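The linear cross-entropy benchmarking fidelity is commonly written as $F_{\mathrm{XEB}} = 2^n \langle P(x_i) \rangle_i - 1$. Here n is the number of qubits, $P(x_i)$ is the ideal (classically simulated) probability of measured bitstring $x_i$, and the average runs over all samples. The sketch below implements that formula. The toy "ideal" distribution, built from exponentially distributed weights, is an assumed stand-in for a simulated random circuit, and all names are hypothetical. Samples drawn from the ideal distribution should give a fidelity near 1, while uniformly random (pure-noise) samples should give a fidelity near 0.

```python
import random


def linear_xeb_fidelity(ideal_probs: dict[str, float], samples: list[str], n_qubits: int) -> float:
    """Linear XEB fidelity: 2**n times the mean ideal probability of the samples, minus 1."""
    mean_p = sum(ideal_probs[x] for x in samples) / len(samples)
    return (2 ** n_qubits) * mean_p - 1


def toy_ideal_distribution(n_qubits: int, rng: random.Random) -> dict[str, float]:
    """Hypothetical stand-in for a random circuit's ideal output distribution.

    Exponentially distributed weights mimic the Porter-Thomas-like
    statistics of random-circuit output probabilities.
    """
    weights = [rng.expovariate(1.0) for _ in range(2 ** n_qubits)]
    total = sum(weights)
    return {format(i, f"0{n_qubits}b"): w / total for i, w in enumerate(weights)}


rng = random.Random(1)
n = 10
probs = toy_ideal_distribution(n, rng)
bitstrings = list(probs)

# A perfect, noiseless device samples from the ideal distribution...
ideal_samples = rng.choices(bitstrings, weights=[probs[b] for b in bitstrings], k=50_000)
# ...while a fully decohered one outputs uniformly random bitstrings.
noise_samples = rng.choices(bitstrings, k=50_000)

print(f"ideal sampler: F_XEB ≈ {linear_xeb_fidelity(probs, ideal_samples, n):.3f}")  # near 1
print(f"noise sampler: F_XEB ≈ {linear_xeb_fidelity(probs, noise_samples, n):.3f}")  # near 0
```

A real device sits between these two extremes. A small but clearly nonzero $F_{\mathrm{XEB}}$ is evidence that the hardware is sampling from the intended circuit rather than producing noise.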

The new quantum processor took one million samples in 200 seconds, reaching a cross-entropy benchmarking fidelity of 0.8%. For comparison, reaching the same 0.8% fidelity on a classical computer with about one million processor cores took 130 seconds. A precise classical verification, reaching 100% fidelity, took 5 hours.

The researchers also used a broader set of two-qubit gates to spread entanglement more widely across the full 53-qubit processor, and they increased the number of cycles from 14 to 20. With 53 qubits operating over 20 cycles, the cross-entropy benchmarking fidelity stayed above 0.1%, as estimated using proxy circuits that remain classically tractable. Given the immense disparity in physical resources, these results already show a clear advantage of quantum hardware over its classical counterpart.

They attributed the success of the experiment to improved two-qubit gates with enhanced parallelism, which reliably achieved record performance even when many gates operated simultaneously. To achieve this, they used a new type of control knob that can turn off interactions between neighboring qubits, greatly reducing errors in such a highly connected qubit system. The team made further performance gains by optimizing the chip design to lower crosstalk and by developing new control calibrations that avoid qubit defects.

They said that quantum processors based on superconducting qubits can now perform computations in a Hilbert space of dimension $2^{53} \approx 9 \times 10^{15}$, which is beyond the reach of the fastest classical supercomputers available today. This demonstrates quantum supremacy over today’s leading classical algorithms and is a remarkable milestone for quantum computing. It also answers the skeptics who questioned the future of this computing technology, its power, and its validity in the real world.
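A Hilbert space of dimension $2^{53}$ is out of reach for the most naive classical approach, which stores the full state vector with one complex amplitude per basis state. This estimate assumes 8 bytes per amplitude (single-precision complex numbers). Practical simulators use cleverer methods, so the calculation only illustrates the scale.

```python
N_QUBITS = 53
BYTES_PER_AMPLITUDE = 8  # assumption: complex64, i.e. two 32-bit floats

dimension = 2 ** N_QUBITS  # one complex amplitude per basis state
total_bytes = dimension * BYTES_PER_AMPLITUDE

print(f"Hilbert-space dimension: {dimension:,} ≈ {dimension:.1e}")
print(f"naive state-vector memory ≈ {total_bytes / 1e15:.0f} PB")
```

This works out to about 72 petabytes for the state vector alone, before any computation is done on it.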

Looking ahead, the team expects the computational power of their quantum processors to continue growing at a double-exponential rate. Sustaining this growth, and eventually offering the computational volume needed to run well-known quantum algorithms such as Shor's or Grover's algorithm, will require more work on quantum error correction, which they acknowledge must become a focus of engineering attention. Much work remains before quantum computers become a practical reality: researchers still need to demonstrate robust quantum error-correction protocols that enable sustained, fault-tolerant operation.
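The article does not spell out what "double-exponential" means. This sketch assumes one common reading: the cost of simulating a quantum processor classically grows exponentially with its size, and the hardware itself also improves exponentially over time, so the exponent itself grows exponentially. The starting point of 53 qubits and the two-year doubling period are hypothetical parameters, chosen only to show the shape of the curve.

```python
import math


def qubits_at(t_years: float, q0: int = 53, doubling_years: float = 2.0) -> float:
    """Hypothetical hardware scaling: qubit count doubles every `doubling_years`."""
    return q0 * 2 ** (t_years / doubling_years)


def log10_simulation_cost(t_years: float) -> float:
    """log10 of a naive classical simulation cost that scales like 2**qubits."""
    return qubits_at(t_years) * math.log10(2)


for t in (0, 2, 4, 6):
    # The *exponent* of the cost doubles each period: growth is double-exponential.
    print(f"year {t}: ~{qubits_at(t):.0f} qubits, cost ~ 10^{log10_simulation_cost(t):.0f}")
```

Under these assumptions, the exponent of the simulation cost roughly doubles at each step (about 16, 32, 64, then 128).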