This Method Verifies Whether Quantum Chips Are Accurately Executing Operations

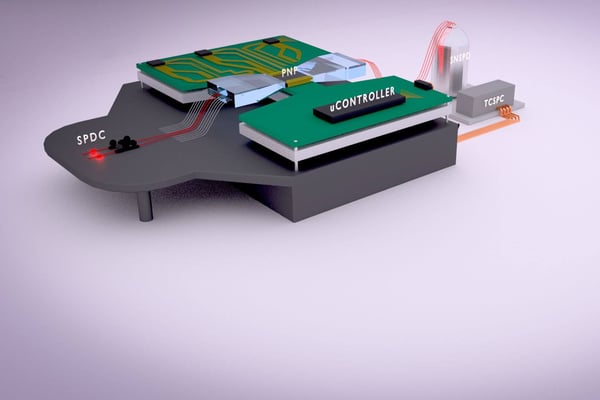

Researchers from MIT, Google, and elsewhere have designed a novel method for verifying when quantum processors have accurately performed complex computations that classical computers can’t. They validate their method on a custom system (pictured) that’s able to capture how accurately a photonic chip (PNP) computed a notoriously difficult quantum problem. Image © Mihika Prabhu.

On October 23, 2019, a research paper published in the journal Nature reported that quantum speedup is achievable in a real-world system. Classical computers work on binary code: 0s and 1s. Quantum computers instead use quantum bits (qubits), which can represent both a 0 and a 1 at the same time, and this lets them outperform classical computers on certain problems. Although a qubit can occupy a state between 0 and 1 during a computation, measuring it always yields either a 0 or a 1.

But how do we verify that the quantum chip, a crucial component of a quantum computer, is working correctly?

A team of researchers from MIT has described a novel protocol to efficiently verify that a Noisy Intermediate-Scale Quantum (NISQ) chip has performed all the right quantum operations. The work was published in the journal Nature Physics.

Quantum chips perform computations using qubits. A qubit can represent either of the two states of a classical binary bit, a 0 or a 1, or a quantum superposition of both states simultaneously. Superposition is where the quantum computer shows its prowess: it enables quantum computers to solve complex problems that are practically impossible for classical computers, potentially leading to breakthroughs in material design, drug discovery, and machine learning, among other applications.
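The link between superposition and measurement can be illustrated with a few lines of Python. This is only a toy sketch of a single qubit under the standard Born rule, not how a quantum chip is programmed; the function names `measurement_probabilities` and `measure`, the equal-superposition amplitudes, and the random seed are all assumptions chosen for illustration.

```python
import math
import random

def measurement_probabilities(alpha, beta):
    """Return (P(0), P(1)) for the qubit state alpha|0> + beta|1> (Born rule)."""
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    return abs(alpha) ** 2 / norm, abs(beta) ** 2 / norm

def measure(alpha, beta, rng):
    """Simulate one measurement: the result is always a plain 0 or 1."""
    p0, _ = measurement_probabilities(alpha, beta)
    return 0 if rng.random() < p0 else 1

# Hypothetical example: an equal superposition of 0 and 1.
amp = 1 / math.sqrt(2)
rng = random.Random(0)  # fixed seed so the run is repeatable
outcomes = [measure(amp, amp, rng) for _ in range(1000)]

print(measurement_probabilities(amp, amp))
print(outcomes.count(0), outcomes.count(1))
```

Every individual outcome is a 0 or a 1; the superposition only shows up in the statistics, where each result appears roughly half the time.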

But building a fully workable quantum computer is not easy: full-scale quantum computers will require millions of qubits, which isn’t yet feasible. For the past several years, scientists have instead been developing NISQ chips, which contain around 50 to 100 qubits. Because a chip’s outputs can look entirely random, it takes a long time to simulate every step to determine whether everything went according to plan. The team’s protocol efficiently verifies that a NISQ chip has performed all the right quantum operations, rather than operating randomly, and the researchers validated it on a notoriously difficult quantum problem running on a custom quantum photonic chip.

Jacques Carolan, first author and a postdoc in the Department of Electrical Engineering and Computer Science (EECS) and the Research Laboratory of Electronics (RLE), said, “As rapid advances in industry and academia bring us to the cusp of quantum machines that can outperform classical machines, the task of quantum verification becomes time-critical. Our technique provides an important tool for verifying a broad class of quantum systems. Because if I invest billions of dollars to build a quantum chip, it sure better do something interesting.”

The basic idea behind testing the quantum chip is simple: the researchers trace an output quantum state generated by the quantum circuit back to a known input state.

Doing so reveals which circuit operations were performed on the input to produce the output. Those operations should always match what the researchers programmed; if they don’t, the researchers can use the information to pinpoint where things went wrong on the chip.
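The idea of tracing an output back to a known input can be sketched with a toy two-mode optical circuit. The code below is not the researchers’ protocol: it assumes the programmed operations are known and simply applies their inverses in reverse order. The circuit (a beam splitter, a phase shifter, a beam splitter), the phase values, and all function names are hypothetical choices for illustration.

```python
import cmath
import math

S = 1 / math.sqrt(2)
# Assumed textbook 50:50 beam splitter acting on two optical modes.
BEAM_SPLITTER = ((S, 1j * S), (1j * S, S))

def phase_shifter(theta):
    """Phase shift of theta on the first mode only."""
    return ((cmath.exp(1j * theta), 0j), (0j, 1 + 0j))

def apply(u, state):
    """Multiply a 2x2 matrix by a 2-component state."""
    return (u[0][0] * state[0] + u[0][1] * state[1],
            u[1][0] * state[0] + u[1][1] * state[1])

def dagger(u):
    """Conjugate transpose, which is the inverse of a unitary operation."""
    return tuple(tuple(complex(u[j][i]).conjugate() for j in range(2))
                 for i in range(2))

def run(ops, state):
    for u in ops:
        state = apply(u, state)
    return state

def undo(ops, state):
    """Apply the inverse of each operation, last operation first."""
    for u in reversed(ops):
        state = apply(dagger(u), state)
    return state

def fidelity(a, b):
    """Overlap between two states: 1.0 means they are identical."""
    return abs(sum(complex(x).conjugate() * y for x, y in zip(a, b))) ** 2

known_input = (1 + 0j, 0j)
programmed = [BEAM_SPLITTER, phase_shifter(0.7), BEAM_SPLITTER]
faulty = [BEAM_SPLITTER, phase_shifter(1.2), BEAM_SPLITTER]  # drifted phase

# A chip that ran the programmed circuit is traced back to the input exactly.
fidelity_ok = fidelity(known_input, undo(programmed, run(programmed, known_input)))
# A chip that ran something else is not, which flags a fault.
fidelity_bad = fidelity(known_input, undo(programmed, run(faulty, known_input)))
print(round(fidelity_ok, 6), round(fidelity_bad, 6))
```

When the chip performs exactly the programmed operations, undoing them recovers the input with a fidelity of 1; when one phase has drifted, the fidelity drops below 1, signalling a mismatch.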

The core of the new protocol is divide and conquer: instead of unscrambling the whole output in one shot, which takes a very long time, the researchers unscramble it layer by layer. This lets them break the problem into smaller pieces and tackle it more efficiently.

The divide-and-conquer approach was inspired by the way neural networks solve problems through many layers of computation. Building on it, the researchers constructed a novel quantum neural network (QNN), in which each layer represents a set of quantum operations.

To run the QNN, they used traditional silicon fabrication techniques to build a 2-by-5-millimetre NISQ chip with more than 170 control parameters: tunable circuit components that make it easier to manipulate the photon path. A pair of photons with specific wavelengths is generated by an external component and injected into the chip, where the photons travel through the chip’s phase shifters, which change the photons’ paths. This produces a random quantum output state that represents what would happen during a computation. The output is then measured by an array of external photodetector sensors.
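How a phase shifter can change a photon’s path is easiest to see in a textbook Mach-Zehnder interferometer: a phase shifter placed between two beam splitters. The sketch below assumes that simplified layout and a single photon; it is not a model of the actual chip, and the beam-splitter convention and function names are illustrative assumptions.

```python
import cmath
import math

S = 1 / math.sqrt(2)
# Assumed textbook 50:50 beam splitter acting on two waveguides (ports 0 and 1).
BEAM_SPLITTER = ((S, 1j * S), (1j * S, S))

def phase_shifter(theta):
    """Tunable phase theta applied to the photon amplitude in port 0."""
    return ((cmath.exp(1j * theta), 0j), (0j, 1 + 0j))

def apply(u, state):
    return (u[0][0] * state[0] + u[0][1] * state[1],
            u[1][0] * state[0] + u[1][1] * state[1])

def output_probabilities(theta):
    """Probability of detecting the photon at port 0 and at port 1."""
    state = (1 + 0j, 0j)  # photon injected into port 0
    for u in (BEAM_SPLITTER, phase_shifter(theta), BEAM_SPLITTER):
        state = apply(u, state)
    return tuple(abs(a) ** 2 for a in state)

for theta in (0.0, math.pi / 2, math.pi):
    p0, p1 = output_probabilities(theta)
    print(f"theta={theta:.2f}  P(port 0)={p0:.3f}  P(port 1)={p1:.3f}")
```

With this convention, a phase of 0 sends the photon entirely to port 1, a phase of π sends it entirely to port 0, and values in between split the probability, so tuning one parameter steers the photon between paths.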

The output is then sent to the QNN. The first layer uses complex optimization techniques to dig through the noisy output and pinpoint the signature of a single photon among all those scrambled together. It then unscrambles that single photon from the group to identify which circuit operations return it to its known input state. Those operations should exactly match the circuit’s specific design for the task. Each subsequent layer performs the same computation, removing from the equation any previously unscrambled photons, until all photons are unscrambled.

For instance, say the input state of the qubits fed into the processor was all zeroes, and the NISQ chip executes a series of operations on the qubits to generate a massive, seemingly randomly changing number as output. (An output number will constantly be changing because it’s in a quantum superposition.) Layer by layer, the QNN selects chunks of that massive number and determines which operations revert each qubit back to its input state of zero. If any operations differ from the originally planned operations, the researchers will know that something has gone awry. They can inspect any such mismatches between the output and input states, and use that information to tweak the circuit design.
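The all-zeroes example can be turned into a heavily simplified sketch. It assumes each qubit is independent and was rotated by a single angle, so there is no entanglement; the real protocol handles scrambled photons and uses more sophisticated optimization than the grid search shown here. The planned and actual angles, the tolerance, and the function names are hypothetical.

```python
import math

def ry(theta, state):
    """Rotate a single-qubit state with real amplitudes by angle theta."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)
    a, b = state
    return (c * a - s * b, s * a + c * b)

def learn_undo_angle(state, steps=3600):
    """Grid-search the rotation angle that best returns `state` to zero."""
    best_angle, best_p0 = 0.0, -1.0
    for k in range(steps):
        phi = 2 * math.pi * k / steps
        p0 = ry(-phi, state)[0] ** 2  # chance of measuring 0 after undoing phi
        if p0 > best_p0:
            best_angle, best_p0 = phi, p0
    return best_angle

planned = [0.3, 1.1, 2.0]  # rotation angles the circuit was designed to apply
actual = [0.3, 1.1, 2.4]   # what the (hypothetical) chip really did
outputs = [ry(theta, (1.0, 0.0)) for theta in actual]  # every input starts at zero

# Work through the qubits one at a time, mimicking the layer-by-layer idea.
mismatches = []
for i, state in enumerate(outputs):
    learned = learn_undo_angle(state)
    if abs(learned - planned[i]) > 0.01:
        mismatches.append(i)

print(mismatches)  # prints [2]: the third qubit deviates from the plan
```

The learned angles for the first two qubits agree with the plan, while the third does not, which tells the experimenter where to look on the chip.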

The researchers were able to “unsample” two photons that had run through the boson sampling problem on their custom NISQ chip, in a fraction of the time it would take traditional verification approaches. They also said that, in addition to quantum verification, the process helps capture useful physical properties.